🤖 Tech Talk: Google, OpenAI win Math Olympiad medals. At what cost?

Plus: Why voice AI is becoming the talk of the town; AI tool of the week: How to Use GenAI to streamline decisionmaking with scenario-based risk alerts; How much is being invested in emerging tech?

Dear reader,

“I’m not good at math” is a common refrain heard around the world. Until recently, even advanced artificial intelligence (AI) systems could relate. Despite their linguistic prowess, they consistently stumbled on general mathematics problems, particularly those requiring multi-step reasoning. But that’s starting to change.

New breakthroughs suggest AI is becoming increasingly capable, not just at everyday math but also at solving some of the most challenging problems posed by the prestigious International Mathematical Olympiad (IMO), long considered a proving ground for the world's sharpest young minds.

Last July, Google DeepMind announced that its systems—AlphaProof, a reinforcement learning-based formal reasoning model, and AlphaGeometry 2, an improved geometry solver—jointly tackled four out of six problems from the 2024 IMO, matching the performance of a silver medalist for the first time.

These models, however, required human experts to translate the natural-language questions into domain-specific formal languages like Lean, and the full process took two to three days of computation.

This July, Google’s latest model, Gemini Deep Think, took a major leap forward. It solved five out of six IMO problems perfectly, scoring 35 points (equivalent to a gold medal), and did so entirely end-to-end in natural language within the 4.5-hour competition window, without any manual translation. The model reads official problem descriptions, reasons through them, and produces rigorous natural-language proofs, all autonomously.

Gemini Deep Think integrates cutting-edge research, including a technique called parallel thinking, allowing the model to explore multiple solution paths simultaneously before selecting the best one, rather than relying on a single linear line of thought.
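
As a rough illustration of the idea only, parallel thinking can be pictured as a best-of-N search: several candidate solution paths are explored concurrently, and a scorer picks the winner. In the sketch below, `explore_path`, `score`, and the toy task of approximating the square root of 2 are hypothetical stand-ins of ours, not a description of how Gemini Deep Think is actually built.

```python
from concurrent.futures import ThreadPoolExecutor

TARGET = 2.0  # toy problem: find x such that x * x is close to 2


def explore_path(start: float, steps: int = 5) -> float:
    """Hypothetical 'solution path': refine a starting guess with Newton's method."""
    x = start
    for _ in range(steps):
        x = 0.5 * (x + TARGET / x)
    return x


def score(candidate: float) -> float:
    """Hypothetical scorer: a smaller error means a better candidate."""
    return abs(candidate * candidate - TARGET)


def parallel_think(starts: list[float]) -> float:
    """Explore every starting point concurrently, then keep the best result."""
    with ThreadPoolExecutor() as pool:
        candidates = list(pool.map(explore_path, starts))
    return min(candidates, key=score)


best = parallel_think([0.1, 1.0, 10.0, 100.0])
print(round(best, 4))
```

The point of the design is that a poor starting path (here, 100.0) does not sink the final answer, because the selection step only keeps the strongest candidate.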

It was further refined using reinforcement learning on multi-step reasoning and theorem-proving tasks and trained on a curated dataset of high-quality mathematical solutions. The team also embedded strategic hints and IMO-specific problem-solving advice into its prompts.

Google plans to release a version of Gemini Deep Think to select mathematicians and trusted testers ahead of a broader rollout to Google AI Ultra subscribers.

On July 19, OpenAI revealed that its model was tested on the 2025 IMO problems under real exam conditions: two 4.5-hour sessions, no tools or internet access, and using only official problem statements. The model responded with natural-language proofs, showcasing OpenAI’s progress in math reasoning with an experimental reasoning model.

OpenAI researcher Alexander Wei said on X: "In our evaluation, the model solved 5 of the 6 problems on the 2025 IMO. For each problem, three former IMO medalists independently graded the model’s submitted proof, with scores finalized after unanimous consensus. The model earned 35/42 points in total, enough for gold!"

A pinch of salt

The achievements haven’t been without controversy. Critics have questioned both Google and OpenAI for not disclosing the amount of computing power used to reach these results.

OpenAI, in particular, drew flak for allegedly “stealing the spotlight from kids” by announcing its performance before the IMO closing ceremony, rather than allowing human contestants their moment.

Adding to the tension, a public sparring match has erupted between Google and OpenAI, raising doubts about whether the exercise was about maths prowess or one-upmanship.

Google DeepMind researcher Thang Luong and Mikhail Samin of the AI Governance and Safety Institute pointed out that OpenAI’s model wasn’t evaluated using the IMO’s official scoring criteria, making its gold medal claim difficult to verify.

“Yes, there is an official marking guideline from the IMO organizers which is not publicly available. Without evaluation based on that, no medal claim can be made,” Luong posted on X. “With one point deducted, it’s a silver, not gold.”
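
The arithmetic behind the dispute is simple. A minimal sketch, assuming the standard IMO format of seven points per problem and taking the gold threshold of 35 from the scores quoted in this piece (the helper name `is_gold` is ours):

```python
POINTS_PER_PROBLEM = 7  # standard IMO marking: each of the six problems is worth 7
TOTAL_PROBLEMS = 6
GOLD_CUTOFF = 35        # assumption drawn from the scores quoted in this piece


def is_gold(score: int) -> bool:
    """Return True if the score reaches the assumed gold cutoff."""
    return score >= GOLD_CUTOFF


max_score = POINTS_PER_PROBLEM * TOTAL_PROBLEMS  # 42
claimed = 5 * POINTS_PER_PROBLEM                 # five full solutions: 35
after_deduction = claimed - 1                    # Luong's scenario: 34

print(f"{claimed}/{max_score} gold? {is_gold(claimed)}")
print(f"{after_deduction}/{max_score} gold? {is_gold(after_deduction)}")
```

With no margin above the cutoff, a single deducted point changes the medal, which is why the unpublished marking guideline matters.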

Incidentally, India secured three gold medals, two silver, and one bronze at the 66th IMO held in Australia this month. The first IMO was held in 1959 in Romania with seven countries; the competition has since expanded to over 100 countries from five continents.

How voice AI is coming of age

Voice AI has emerged as the latest battleground in the race to define how humans interact with machines, according to a new CB Insights report.

Meta’s recent acquisition of PlayAI and the $371 million in equity funding raised so far this year—already matching the full-year 2024 total—signal growing investor and Big Tech conviction that voice will become the dominant interface for AI, replacing traditional browser and mobile interactions with more natural, conversational experiences, according to the report.

Advances in the field are making this shift increasingly feasible. Voice AI systems can now respond in under 300 milliseconds, or less than a third of a second, after you speak—fast enough to feel like a real conversation. This leap in responsiveness is key to making voice interactions feel seamless.

As Chris McCann of Race Capital, a PlayAI backer, puts it: “Voice is how people naturally communicate—but most voice AI systems still sound robotic or lag behind. We believed fast, expressive voice tech would be critical to making AI feel human and useful, especially in enterprise settings like IVR (interactive voice response), customer support, and sales.”

With voice becoming a core modality of the AI future—and tech giants rushing to win the AI device race—owning the foundational tech that enables human-AI communication is now mission-critical. A wave of acquisitions is expected as firms move to lock in voice AI capabilities.

CB Insights, using its Mosaic score to evaluate company health, has flagged the most promising M&A targets in the space—and why they’re in such high demand.

What about India?

According to Tracxn, there are currently 67 speech and voice recognition startups in India, including Avaamo, Sarvam, Yellow.ai, Gnani.ai, and Skit.ai. Of these, 31 startups are funded, with eight having secured Series A+ funding.

This year has seen the creation of two speech and voice recognition startups in India. Over the past 10 years, an average of four new companies have been launched annually. Notably, several of these startups have been founded by alumni of BITS Pilani, IIT-Kharagpur and IIT-Bombay.

AI Unlocked

by AI&Beyond, with Jaspreet Bindra and Anuj Magazine

This week we have unlocked: How to use GenAI to streamline decisionmaking with scenario-based risk alerts.

What problem does it solve?

C-level leaders struggle to monitor risks across major projects, like supply chain disruptions or cybersecurity threats, while handling strategic decisions. Manually tracking internal updates in Google Drive and external signals from news or X posts is time-consuming and risks missing critical issues. This delays responses, potentially causing project setbacks or missed opportunities. Leaders need a streamlined, automated solution delivering clear, actionable risk assessments with mitigation suggestions, enabling faster, informed decisionmaking.

Google Gemini’s new capability

Scheduled Actions: Automates recurring or one-time tasks, delivering timely insights via email or notifications, pulling data from Google Drive and external sources for proactive management.

How to access: https://gemini.google.com/

Google Gemini’s Scheduled Actions can help you:

- Automate risk monitoring: Schedule weekly risk assessments for key projects, integrating internal and external data.

- Stay proactive: Receive timely alerts with mitigation strategies to address issues before they escalate.

- Save time: Eliminate manual data aggregation, freeing focus for strategic priorities.

Example:

Imagine a CEO overseeing a global product launch. Supply chain delays or negative social media sentiment could derail the project. Here’s how to set up Scheduled Actions for risk monitoring:

- Open the Gemini app or visit gemini.google.com.

- In the prompt box, enter: “Every Tuesday at 10 AM, review my Google Drive folder ‘Product Launch’ for updates and scan X and news for risks like supply chain issues. Summarize risks to my top three initiatives with mitigation suggestions, and email the report.”

- Click/tap Submit; Gemini confirms the action.

- Edit if needed via Profile Menu (mobile) or Settings & Help (web) > Scheduled Actions.

Each week, Gemini emails a report that might, for example, flag a supplier delay spotted on X and suggest alternative vendors.
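
For readers who set up several such alerts, the prompt can be assembled from a few variables before pasting it into Gemini. A minimal sketch: the function `build_risk_prompt` and its parameters are hypothetical helpers of ours, and the code only produces the prompt text; it does not call any Gemini API.

```python
def build_risk_prompt(day: str, time: str, folder: str, risks: list[str],
                      top_n: str = "three") -> str:
    """Assemble a Scheduled Actions prompt like the one in the example above."""
    risk_text = ", ".join(risks)
    return (
        f"Every {day} at {time}, review my Google Drive folder '{folder}' "
        f"for updates and scan X and news for risks like {risk_text}. "
        f"Summarize risks to my top {top_n} initiatives with mitigation "
        "suggestions, and email the report."
    )


prompt = build_risk_prompt(
    day="Tuesday",
    time="10 AM",
    folder="Product Launch",
    risks=["supply chain issues"],
)
print(prompt)
```

Swapping the folder name or the list of risks yields a consistent prompt for each project, which helps when working within the cap on scheduled actions.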

What makes Google Gemini’s Scheduled Actions special?

- Deep Google integration: Seamlessly pulls data from Gmail, Drive, and Calendar for contextual insights.

- Flexible scheduling: Supports one-time or recurring tasks (daily, weekly, monthly) with a 10-action limit.

- Proactive delivery: Results arrive via push notifications, in-chat messages, or email, tailored to your workflow.

Note: The tools and analysis featured in this section demonstrated clear value based on our internal testing. Our recommendations are entirely independent and not influenced by the tool creators.

In numbers

2.5 billion

Number of daily prompts that ChatGPT users send globally

330 million

Number of those daily prompts that come from the US, according to an article in Axios

500 million

Weekly active users of the free version of ChatGPT

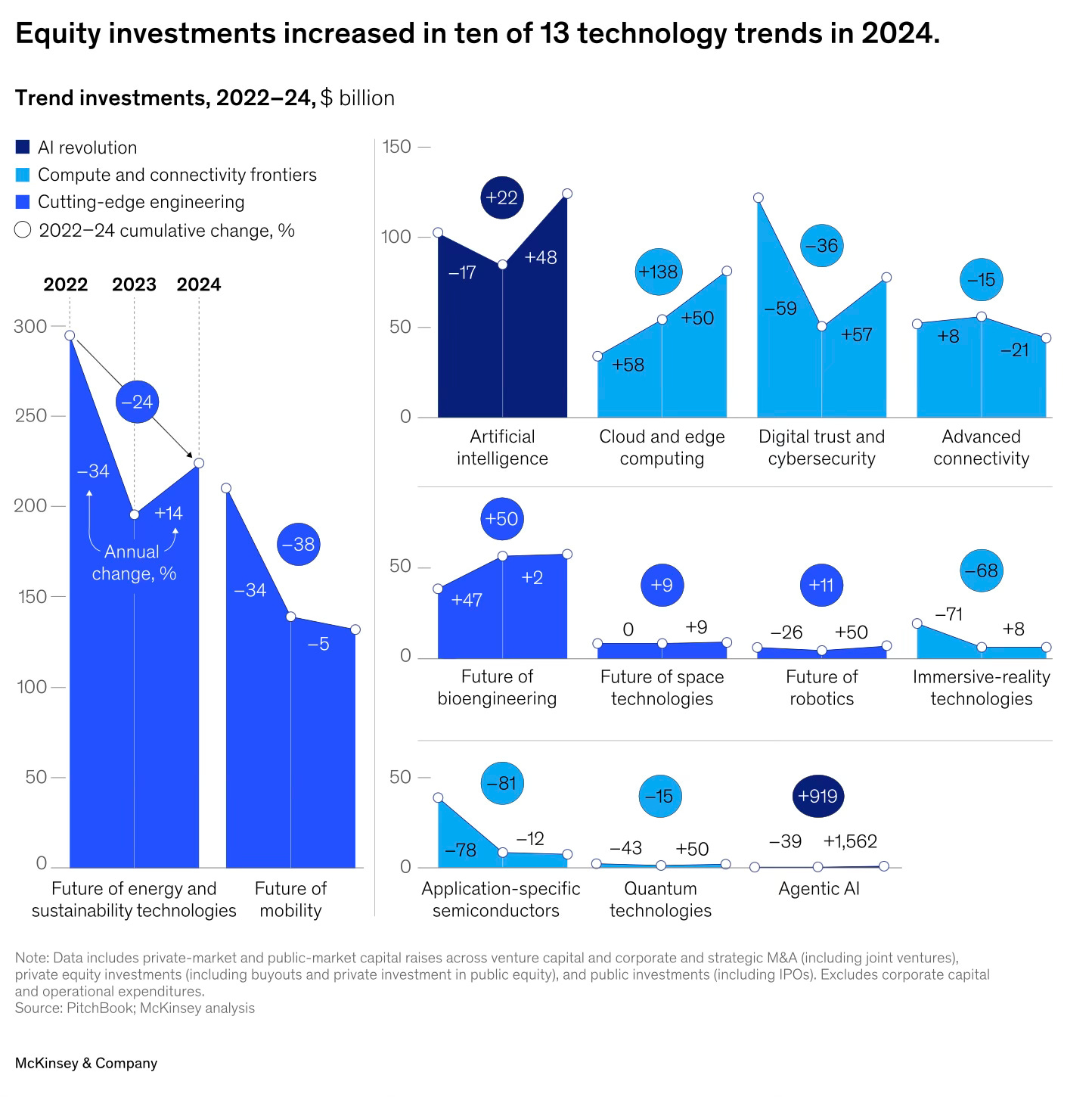

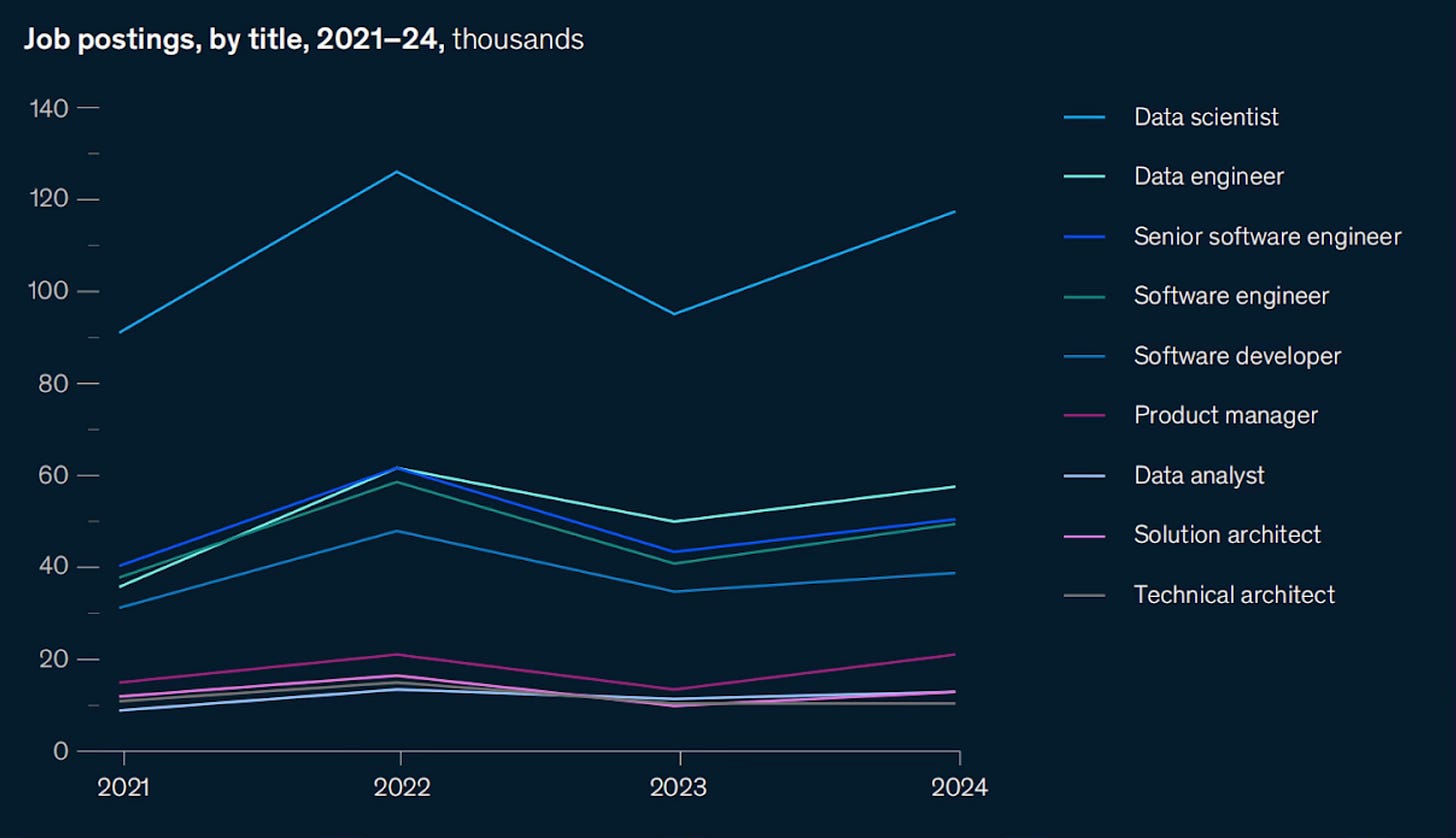

In charts

You may also want to read

Big move to fight AI deepfakes: Here’s how Denmark plans to protect you

Apple needs to leave its ‘comfort zone’ on AI. Elon Musk could help.

SoftBank and Open AI’s $500 billion AI project struggles to get off ground

Don’t be naive, Agentic AI won’t eliminate agency costs

Is OpenAI exaggerating ChatGPT Agent's powers?

Google officially confirms Pixel 10 Pro design: What to expect?

SoftBank-OpenAI’s Stargate project hits roadblock

Hope you folks have a great weekend, and your feedback will be much appreciated — just reply to this mail, and I’ll respond.

Edited by Feroze Jamal. Produced by Shashwat Mohanty.