🤖 Tech Talk: 40 white-collar jobs AI is most likely to steal. Is yours on the list?

Plus: GPT-5 is here. Now what?; Who's really learning when AI attends schools and colleges?; AI agents, AI-ready data take centrestage in 2025, says Gartner; and more...

Dear reader,

After months of anticipation, OpenAI on Thursday officially launched GPT-5, calling it “our best AI system yet” and “our strongest coding model to date”. OpenAI says GPT-5 marks a “substantial leap in capability” across the board—coding, maths, writing, health, visual understanding, and more.

GPT-5 is free for all users, though Plus subscribers get higher usage limits, and Pro subscribers gain access to GPT-5 Pro, an upgraded version offering extended reasoning for deeper, more accurate responses. GPT-5 mini is a faster, more cost-efficient version of GPT-5, tailored for "well-defined tasks and precise prompts". GPT-5 nano is OpenAI's "fastest, cheapest version of GPT-5".

GPT-5 is also available on all Microsoft platforms, including Microsoft 365 Copilot, Copilot, GitHub Copilot, and Azure AI Foundry. "It's the most capable model yet from our partners at OpenAI, bringing powerful new advances in reasoning, coding, and chat, all trained on Azure," Satya Nadella, chairman and CEO of Microsoft, posted on X.

Cognitive scientist Gary Marcus acknowledges GPT-5 is "good progress on all fronts" but "obviously not AGI (artificial general intelligence)" and "not a giant leap forward (e.g. Grok 4 beats it on ARC-AGI-2 results)". He adds, "Pricing is good, but profits may continue to be elusive; still no clear technical moat."

Ethan Mollick, professor of management at Wharton, believes "GPT-5 is a big deal". He notes: "GPT-5 just does stuff, often extraordinary stuff, sometimes weird stuff, sometimes very AI stuff, on its own. And that is what makes it so interesting." Mollick, however, points out that free users (unlike paying subscribers) cannot directly select the more powerful models, such as GPT-5 Thinking, which leaves them "at the mercy of GPT-5’s model selector".

Key features:

1. Smarter architecture: GPT-5 is the new default in ChatGPT, replacing all earlier models. At its heart is a routing system that decides, in real time, whether to answer a query with the standard model or with GPT-5 Thinking, a more powerful version for complex queries. Paid users can also pick “GPT-5 Thinking” manually from the model picker; free users cannot, so for them the router alone decides which model responds.

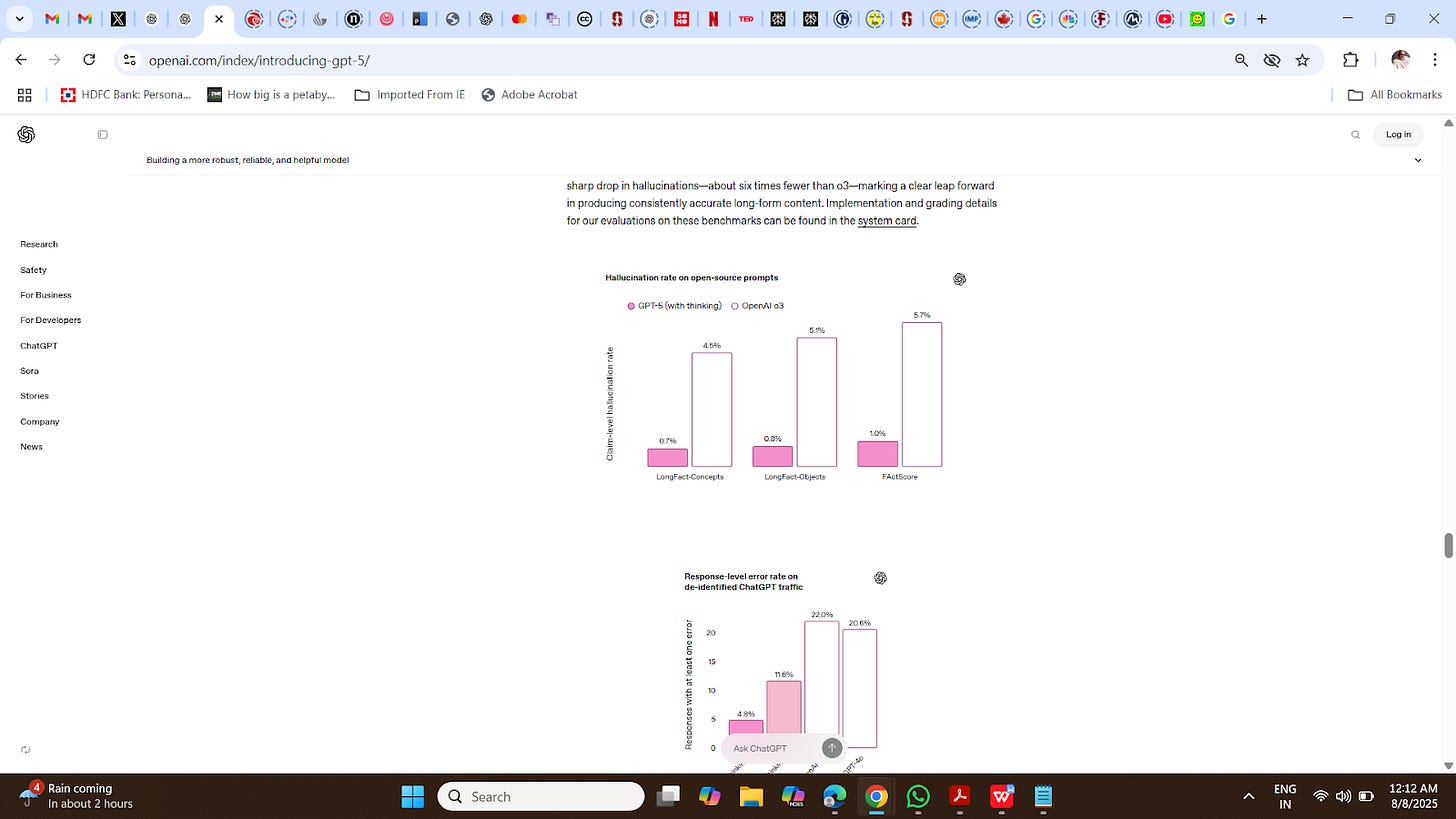

2. Fewer hallucinations: Beyond benchmark gains, OpenAI says GPT-5 is significantly more useful in real-world scenarios, being faster, more accurate, and better at following instructions. The company also claims progress in reducing hallucinations, sycophancy, and inconsistency, especially across ChatGPT’s top use cases of writing, coding, and health. In OpenAI's words, GPT-5 "is significantly less likely to hallucinate than our previous models".

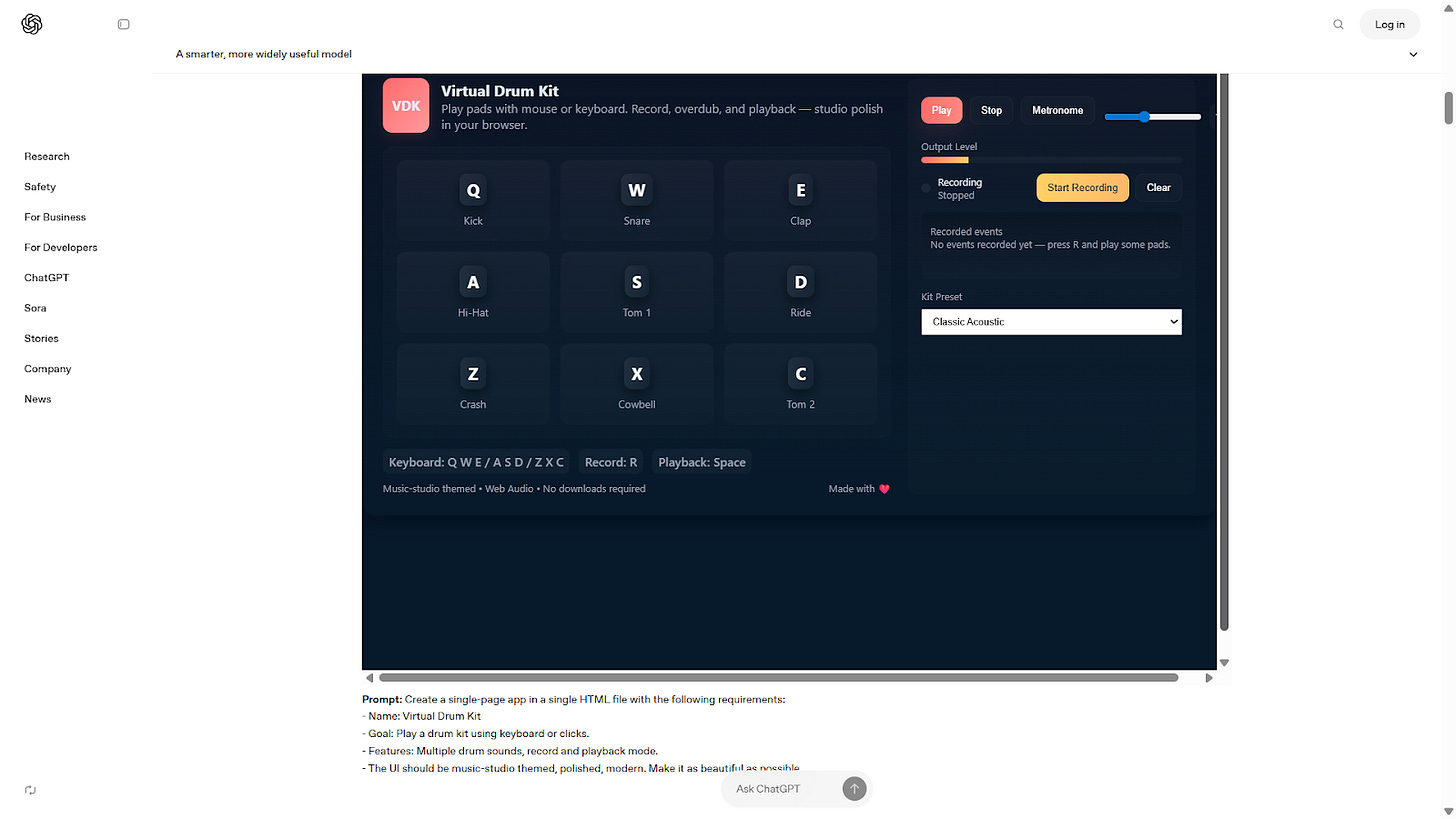

3. Enhanced coding: OpenAI says GPT-5 excels at front-end development, debugging large codebases, and even designing fully functional websites, games, and apps—often from a single prompt.

Early testers have praised its aesthetic sensibility, noting its improved grasp of design elements like spacing, typography, and layout, which results in cleaner, more intuitive output.

4. Improved writing: OpenAI calls GPT-5 "our most capable writing collaborator yet". According to the company, GPT-5 handles writing that involves structural ambiguity, such as sustaining unrhymed iambic pentameter or free verse that flows naturally, combining respect for form with expressive clarity. These improvements should make ChatGPT better at everyday tasks like drafting and editing reports, emails, and memos.

5. Health: GPT-5 scores significantly higher than any previous model on HealthBench, an evaluation OpenAI published earlier this year based on realistic scenarios and physician-defined criteria.

6. Smarter, safer responses: With GPT-5, OpenAI has introduced a new safety training approach called “safe completions”. This method trains the model to provide the most helpful response possible—while staying within established safety limits. When GPT-5 needs to decline a request, it’s designed to do so transparently, explaining the reason and offering safer, alternative responses whenever possible.

7. More transparency and honesty: Along with better factual accuracy, GPT-5 Thinking is also more transparent about what it can and cannot do. For tasks that are impossible, unclear, or require unavailable tools, the model is now better at clearly communicating those limitations. In fact, deception rates have been cut significantly—from 4.8% in GPT‑4o to just 2.1% in GPT‑5 Thinking responses, based on real-world ChatGPT usage data.

8. Can disagree: GPT-5 is also less prone to being overly agreeable or excessively enthusiastic. It uses fewer unnecessary emojis, follows up more thoughtfully, and takes a more nuanced tone. The overall experience feels more like interacting with a knowledgeable, thoughtful human—rather than a chatbot trying too hard to please.

9. Preset personalities: To give users more control over tone and style, OpenAI is launching a research preview of four preset personalities for ChatGPT. These steerability improvements allow users to set how ChatGPT responds—whether concise and professional, warm and supportive, or even a bit sarcastic. The initial options—Cynic, Robot, Listener, and Nerd—are available in text mode now, with support for Voice coming soon. These are optional, adjustable anytime in settings, and designed to reflect your preferred communication style without needing to write custom prompts.

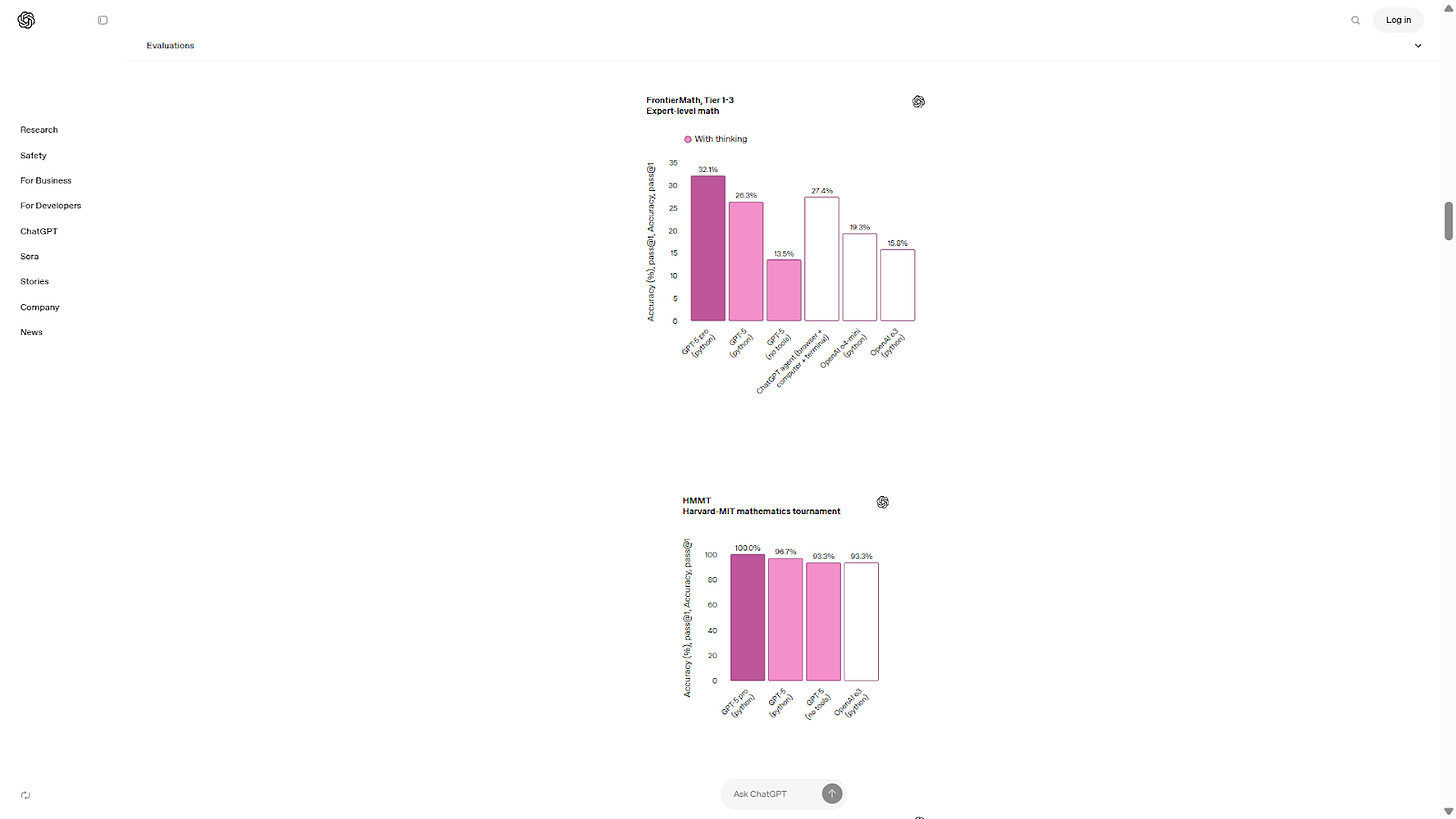

10. Performance: GPT-5 Pro, which replaces the previous o3-pro model, is a high-performance variant that uses extended reasoning and scaled, efficient compute to deliver the most thorough and accurate answers. It sets a new standard on multiple difficult intelligence benchmarks, including achieving best-in-class results on GPQA, a dataset of challenging science questions. In a test of over 1,000 real-world, high-value reasoning prompts, external reviewers preferred GPT-5 Pro’s responses over GPT-5 Thinking in 67.8% of cases. It made 22% fewer major errors and excelled particularly in health, science, math, and coding—earning high marks for relevance, clarity, and depth.
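OpenAI has not published how GPT-5's router chooses between the standard model and GPT-5 Thinking (point 1 above), so what follows is only a toy sketch of the idea, assuming simple keyword and prompt-length heuristics. The model labels `gpt-5` and `gpt-5-thinking`, the `route_query` function, the hint list and the 200-word threshold are all hypothetical. The sketch also shows why free users are "at the mercy" of the selector: only paid tiers can override it.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cues; OpenAI's real router is not public.
REASONING_HINTS = ("think hard", "step by step", "prove", "debug", "analyse", "analyze")
LONG_PROMPT_WORDS = 200  # hypothetical threshold for treating a prompt as complex
PAID_TIERS = {"plus", "pro"}


@dataclass
class Route:
    model: str   # hypothetical model label
    reason: str  # why this model was chosen


def route_query(prompt: str, user_tier: str, manual_choice: Optional[str] = None) -> Route:
    """Toy router: pick a model label for a prompt using simple heuristics."""
    # Paid users may bypass the router by choosing a model in the picker;
    # for free users any manual choice is ignored.
    if manual_choice is not None and user_tier in PAID_TIERS:
        return Route(manual_choice, "manual selection")

    text = prompt.lower()
    # Crude substring check for cues that suggest the user wants deeper reasoning.
    if any(hint in text for hint in REASONING_HINTS):
        return Route("gpt-5-thinking", "reasoning cue in prompt")
    # Treat very long prompts as complex.
    if len(text.split()) > LONG_PROMPT_WORDS:
        return Route("gpt-5-thinking", "long prompt")
    # Everything else goes to the faster default model.
    return Route("gpt-5", "default fast path")


if __name__ == "__main__":
    print(route_query("What's the capital of France?", "free"))
    print(route_query("Please debug this stack trace", "free"))
    print(route_query("Hello", "free", manual_choice="gpt-5-thinking"))
    print(route_query("Hello", "pro", manual_choice="gpt-5-thinking"))
```

A real router is presumably learned from usage data rather than hand-written rules; the sketch only captures the shape of the decision and the free-versus-paid difference.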

You may also read: ‘GPT-5 is coming, but can OpenAI retain its edge?’

OpenAI launches gpt-oss-120b and 20b models

The new open-weight models, gpt-oss-120b and gpt-oss-20b, launched this week and are free for anyone to download. The larger gpt-oss-120b is optimized to run on a single high-end GPU with 80 GB of memory, while the smaller gpt-oss-20b can run on systems with just 16 GB of memory. This means advanced AI can run locally on personal computers, workstations, or self-hosted servers, without depending on cloud-based platforms.
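Why would a 120-billion-parameter model fit on one 80 GB GPU? The back-of-the-envelope sketch below assumes the parameter counts implied by the model names and compares storing each weight in 16 bits with storing it in roughly 4 bits after quantisation (both figures are assumptions for illustration; the article does not give the storage format). It counts weights only and ignores the extra memory needed for activations and context, so real requirements are somewhat higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    bytes_needed = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_needed / 1e9


# (model name, assumed parameter count, memory budget in GB from the article)
MODELS = [("gpt-oss-120b", 120e9, 80), ("gpt-oss-20b", 20e9, 16)]

if __name__ == "__main__":
    for name, params, budget_gb in MODELS:
        for bits in (16, 4):  # 16-bit (unquantised) vs ~4-bit (quantised), both assumptions
            need = weight_memory_gb(params, bits)
            fits = "fits" if need < budget_gb else "does not fit"
            print(f"{name} at {bits}-bit: ~{need:.0f} GB of weights, {fits} in {budget_gb} GB")
```

At 16 bits per weight neither model fits its stated budget (about 240 GB and 40 GB of weights); at about 4 bits they need roughly 60 GB and 10 GB, which leaves headroom within 80 GB and 16 GB.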

For the first time in years, powerful instruction-following models from OpenAI are accessible to anyone (developers, researchers, and hobbyists alike) without paid access or costly infrastructure. This shift could democratize AI development, enabling individuals and organisations to build AI-powered tools for writing, data analysis, or even basic scientific and medical applications, all on their own hardware.

Tracing the timeline: When ChatGPT launched in November 2022, it ran on GPT-3.5. About four months later, in March 2023, OpenAI released GPT-4, marking a big leap in intelligence. In May 2024, it introduced GPT-4o, which improved capabilities nearly across the board and was natively multimodal across text, audio, and images. In February this year, CEO Sam Altman said GPT-5 would unify o3 and other technologies, eliminating o3 as a standalone model. He said GPT-5 would be free for ChatGPT users at a standard intelligence setting, with Plus and Pro subscribers getting access to more advanced versions, and that it would include voice, canvas, search, and deep research.

Gaining traction: OpenAI has also confirmed that ChatGPT is set to hit 700 million weekly active users for the first time this week. The company says that figure is four times what it was a year ago and well above the 500 million it reported at the end of March. The 700 million figure covers all ChatGPT users, including free, Plus, Pro, Enterprise, and Edu customers.
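The growth figures can be checked with simple arithmetic. This sketch reads "four times from the same time last year" as a fourfold rise, which is an interpretation rather than a number OpenAI gave in this form, so the implied year-ago figure is only an estimate.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


CURRENT_WAU = 700e6       # weekly active users, per the article
END_OF_MARCH_WAU = 500e6  # weekly active users at the end of March, per the article
YOY_MULTIPLE = 4          # "risen four times", read here as fourfold (an assumption)

# Working backwards from the fourfold rise to last year's level.
implied_year_ago = CURRENT_WAU / YOY_MULTIPLE

if __name__ == "__main__":
    print(f"Implied weekly users a year ago: ~{implied_year_ago / 1e6:.0f} million")
    print(f"Growth since end of March: {pct_change(END_OF_MARCH_WAU, CURRENT_WAU):.0f}%")
```

On that reading, ChatGPT had roughly 175 million weekly users a year earlier, and the jump from 500 million to 700 million since the end of March is a 40% rise.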

Who's really learning when AI attends schools and colleges?

Artificial intelligence (AI) tools have made their way into classrooms, sparking both enthusiasm and concern among educators and students. A growing number of companies are rolling out AI-powered tutors designed to help students learn without human teachers. At the same time, some educators are using AI to draft lesson plans, worksheets, or parent communications.

An informal survey of 500 Singaporean students—from secondary school to university—conducted by a local news outlet this year found that 84% were using tools like ChatGPT weekly to complete homework. In China, universities are turning to AI to detect cheating, even as dozens of companies market apps that promise to generate undetectable essays and homework using advanced AI. A March 16 article, 'There's a Good Chance Your Kid Uses AI to Cheat', revealed how one New Jersey high school senior relied on AI to complete her English, math, and history assignments last year.

Students have also voiced frustration online, taking to platforms like Rate My Professors to complain about educators who rely heavily on AI. Many argue that this undercuts the value of their tuition, which is meant to pay for human instruction, not tools they can access for free. In one recent case, Ella Stapleton, a business student at Northeastern University, discovered her professor was using AI to write lecture notes. She reported it to the administration and demanded a refund of her tuition for that course, more than $8,000. The university declined. “I was like, wait… did my professor just copy-paste a ChatGPT response?” Stapleton told The New York Times.

While many schools either outright ban or put restrictions on the use of AI, Northeastern says it welcomes the use of AI to enhance all aspects of its teaching, research, and operations but "in a manner that is consistent with university policies and applicable laws, protects our confidential information, personal information, and restricted research data, and appropriately addresses any resulting risks to the university and our community".

Last October, in another case, Jindal Global Law School failed a student after accusing him of using AI to generate 88% of his exam answers. The student, Kausttubh Shakkarwar, who is pursuing a Master of Laws (LLM) in Intellectual Property and Technology Laws at the school, sued it, arguing that there was no specific rule against using AI-generated content.

A month later, the parents of a Massachusetts high school senior filed a lawsuit against his teacher, school district officials, and the local school committee, claiming their son was unfairly punished for using AI tools to research and outline a history essay. Filed in Massachusetts district court, the suit argues that the student violated no existing rules but was penalized with detention, a low grade, and disqualification from the National Honor Society—putting him at a disadvantage in the competitive Ivy League admissions process. The complaint notes that Hingham High School only added its AI policy to the student handbook the following year.

These cases notwithstanding, one can argue that even before ChatGPT launched in November 2022, many students outsourced their papers to essay-writing services around the world. And tools like Grammarly already offered help with "streamlined and effective writing".

Recent research from MIT reveals that ChatGPT can either enhance learning or hinder it, depending on how students use it.

The study found that high-competence learners used the tool to rephrase, revisit, and connect ideas—actively engaging with the material. Brain scans showed they were deeply focused and expended less mental energy on unproductive effort.

In contrast, lower-competence learners often used ChatGPT to shortcut their thinking, relying on quick answers without fully processing or linking concepts. Their brain activity reflected shallow engagement and minimal cognitive effort.

The key takeaway: ChatGPT isn’t inherently harmful—it can be a powerful learning aid if used thoughtfully, but it also makes it easy to bypass the hard work that leads to lasting understanding. The tool should support critical thinking, not replace it.

Parents and educators, therefore, have a vital role in helping students see that even if ChatGPT-generated work passes undetected, it robs them of the chance to truly learn, think independently, and develop creativity—skills essential for life beyond school.


AI agents, AI-ready data take centrestage in 2025, says Gartner

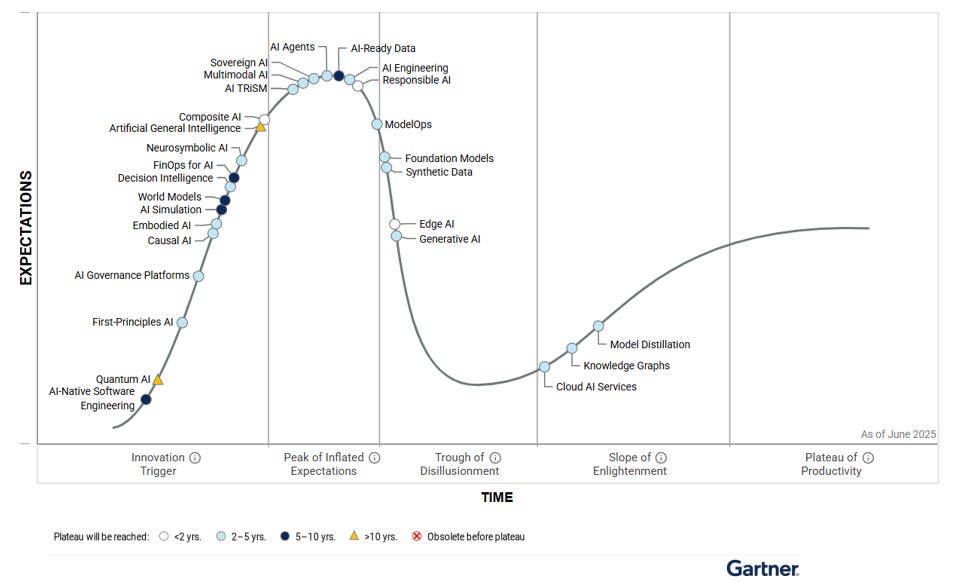

AI agents and AI-ready data are the two fastest-advancing technologies on the 2025 Gartner Hype Cycle for Artificial Intelligence, released this month. Gartner’s Hype Cycles offer a visual framework for tracking the maturity and adoption of emerging technologies and their potential to solve real-world business challenges.

Gartner also highlighted multimodal AI and AI TRiSM as key innovations expected to achieve mainstream adoption within five years and drive the next wave of responsible, scalable, and transformative AI applications across industries.

AI Agents: These are autonomous or semi-autonomous software systems that use AI—often including large language models (LLMs)—to perceive, decide, and act within digital or physical environments. Organisations are increasingly deploying these agents to handle complex tasks. Gartner recommends that "to benefit from AI agents, businesses must identify the most relevant contexts and use cases—no easy task, since every agent and situation is different”.

AI-ready data: It refers to datasets optimised for AI use, improving both accuracy and efficiency. Readiness depends on the data’s contextual fitness for specific AI techniques and use cases, pushing organisations to rethink traditional data management approaches.

According to Gartner, scaling AI requires data capabilities that evolve to meet current and future demands, ensure trust, reduce bias and hallucinations, and safeguard intellectual property and compliance.

Multimodal AI: These models process and integrate multiple data types—text, images, audio, and video—allowing them to better interpret complex situations than single-mode systems. This broadens their usefulness across a wide range of applications. Gartner predicted that multimodal AI will become central to the evolution of software and applications across all industries in the next five years.

AI TRiSM: Standing for AI Trust, Risk, and Security Management, AI TRiSM ensures that AI systems are deployed responsibly and securely. It encompasses four layers of technical capabilities that enforce enterprise policies across AI use cases, supporting governance, fairness, safety, reliability, privacy, and data protection.

AI Unlocked

by AI&Beyond, with Jaspreet Bindra and Anuj Magazine

Today’s AI hack: Google NotebookLM's Video Overview feature.

What problem does it solve?

Most learners struggle with processing dense research materials, lengthy documents, and complex information scattered across multiple sources. But the deeper issue is that everyone learns differently.

Visual-spatial learners get lost in walls of text and need to see information organised graphically. Sequential learners struggle when information jumps around without a clear logical progression. Many learners need both auditory and visual input simultaneously to truly retain information, something traditional text-only materials simply can't provide.

Traditional study methods force learners to adopt a one-size-fits-all approach: read everything, take notes, and try to synthesise manually. This often leads to information overload, poor retention, and difficulty grasping connections between concepts.

Google NotebookLM's recent feature, Video Overview, addresses these fundamental learning style mismatches by transforming text-heavy materials into multimodal presentations that work for different types of learners.

How to access: https://notebooklm.google.com/

Google NotebookLM's Video Overview can help you:

- Create presentation-ready content: Generate professional-looking videos from raw research materials

- Synthesise multiple sources: Combine research papers, articles, and notes into one coherent presentation

- Support different learning styles: Engage both visual and auditory learners simultaneously while accelerating comprehension

Example:

Say you're researching AI ethics for a project and have collected 10 research papers, several blog posts, and your own notes. Here's how Video Overview can help:

- Upload sources: Add all your PDFs, articles, and notes to a NotebookLM notebook.

- Generate video: Select "Video Overview" and let the AI process your materials.

- Review output: Get a structured video presentation with slides, charts, and narration that synthesises your sources.

- Learn efficiently: Absorb key concepts, connections, and insights in a fraction of the time it would take to read everything.

What makes Video Overview special?

- Multimodal learning: Combines visual slides with narration for enhanced retention.

- Automatic synthesis: No need to manually organise information. AI finds patterns and connections.

- Professional quality: Generates presentation-ready content without design skills.

Known limitations

Video Overviews take longer to generate than Audio Overviews, work best with text-heavy documents, and offer limited visual customisation options.

Note: The tools and analysis featured in this section demonstrated clear value based on our internal testing. Our recommendations are entirely independent and not influenced by the tool creators.


You may also want to read

40 white-collar jobs AI is most likely to steal: Is yours on the list?

A new Microsoft study gives us an insight into which jobs are at the most risk due to artificial intelligence. The researchers analysed 200,000 conversations between Microsoft's Copilot AI and users to find the most common activities for which people use AI.

The study found knowledge workers such as historians, writers and authors, CNC tool programmers, brokerage clerks, political scientists, reporters and journalists facing the highest risk. Moreover, the researchers said having a college degree may not help protect these jobs from being eliminated. Read more.

AI is coming for the consultants. Inside McKinsey, ‘This is existential.’

Elon Musk reveals why AI won’t replace consultants anytime soon

‘Screwed up’: Sam Altman warns against using ChatGPT as your lawyer or therapist

'ChatGPT is toxic': Woman opens up about emotional dependency on AI, says she had to delete it

OpenAI, Google, and Anthropic get green light for civilian AI use in the US

Hope you folks have a great weekend, and your feedback will be much appreciated — just reply to this mail, and I’ll respond.

Edited by Feroze Jamal. Produced by Shashwat Mohanty.